In this article, we will walk through reading CSV files from S3 using Python with the help of some popular Python libraries.

AWS S3 is one of the most popular cloud storage services for structured and unstructured data, so reading CSV files from it with Python is a useful skill for any developer. This article focuses only on CSV (comma-separated values); a later article will cover other file formats.

After reading the data from a CSV file in S3, you can store it somewhere else: in another file format such as CSV or JSON, or in a database such as MySQL, PostgreSQL, or SQL Server.

Throughout this article, we will see three ways to read CSV files from S3 using Python programming.

Prerequisites:

To access the CSV file, you need an AWS IAM user with programmatic access, along with that user's access key and secret key. If you are reading from your own AWS account, create an IAM user with programmatic access; if you are reading from a client's or company's account, ask them for the access key and secret key.

Once you have the access key and secret key, configure them on your machine. To do that, install the AWS CLI (Command Line Interface) tool.

I have already written articles on creating an AWS IAM user with programmatic access and on downloading and installing the AWS CLI.

I highly recommend reading the articles below, one by one.

- How to setup AWS IAM user with programmatic access

- How to install and configure AWS CLI in Windows 11

After configuring the AWS CLI on your machine, you can make requests to AWS services.
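As a rough check that the configuration step worked: the `aws configure` command normally writes the keys to an INI-style file at `~/.aws/credentials`. The sketch below parses text in that layout with the standard library and reports which profiles define both keys. The sample text and the helper name `profiles_with_keys` are illustrative assumptions, and the key values are placeholders.

```python
import configparser

# Placeholder text in the layout that `aws configure` writes to
# ~/.aws/credentials; the values here are not real keys.
SAMPLE_CREDENTIALS = """\
[default]
aws_access_key_id = EXAMPLE_ACCESS_KEY
aws_secret_access_key = EXAMPLE_SECRET_KEY

[incomplete]
aws_access_key_id = EXAMPLE_ACCESS_KEY
"""

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def profiles_with_keys(credentials_text):
    """Return the profile names that define both required keys."""
    parser = configparser.ConfigParser()
    parser.read_string(credentials_text)
    return [
        name
        for name in parser.sections()
        if all(parser.has_option(name, key) for key in REQUIRED_KEYS)
    ]


print(profiles_with_keys(SAMPLE_CREDENTIALS))  # ['default']
```

To check a real setup, pass the text of your own credentials file to the helper instead of the sample string.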

Let’s see all the ways to read CSV files from S3 using Python programming.


S3 CSV File

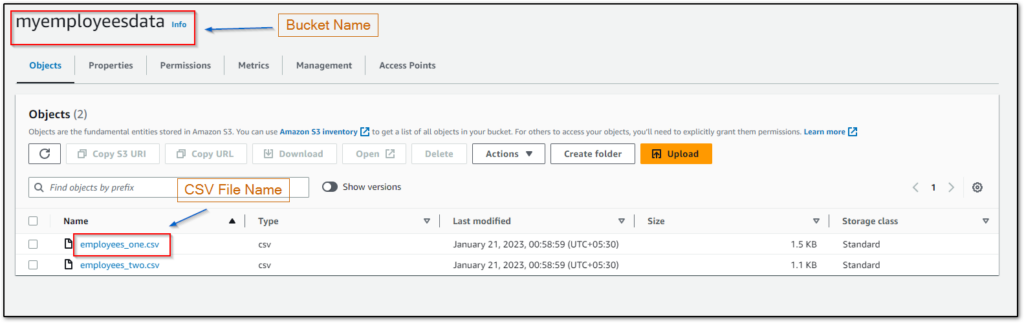

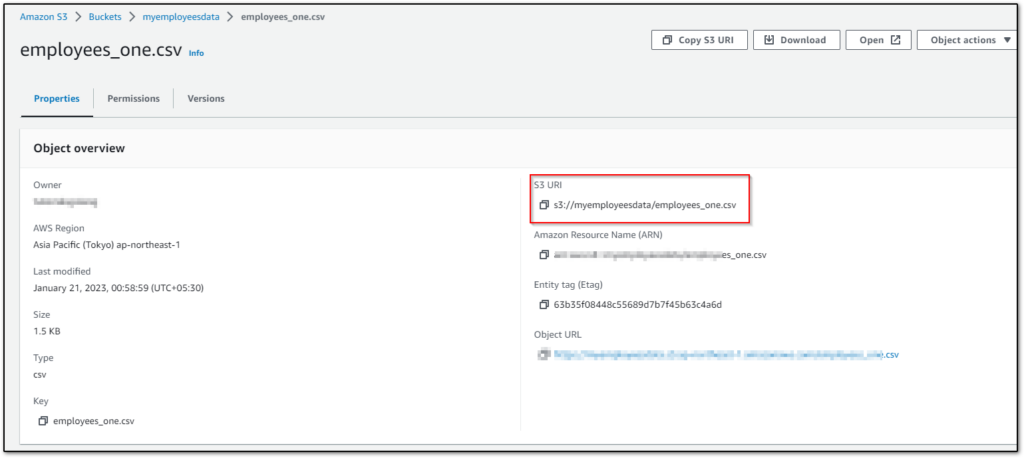

In my AWS account, I have a bucket named myemployeesdata that contains two CSV files, as you can see in the screenshots. We will read data only from the employees_one.csv file, but the process is the same for any CSV file.

Read CSV file from S3 Using Python Boto3

Boto3 is the AWS SDK for Python. It is one of the most popular and widely used libraries for accessing and managing AWS services, and it is maintained by AWS itself.

The Boto3 library offers numerous features for accessing and managing AWS services in a Pythonic way.

To use this library, install it with the pip command.

pip install boto3

Let’s see how we can read a CSV file.

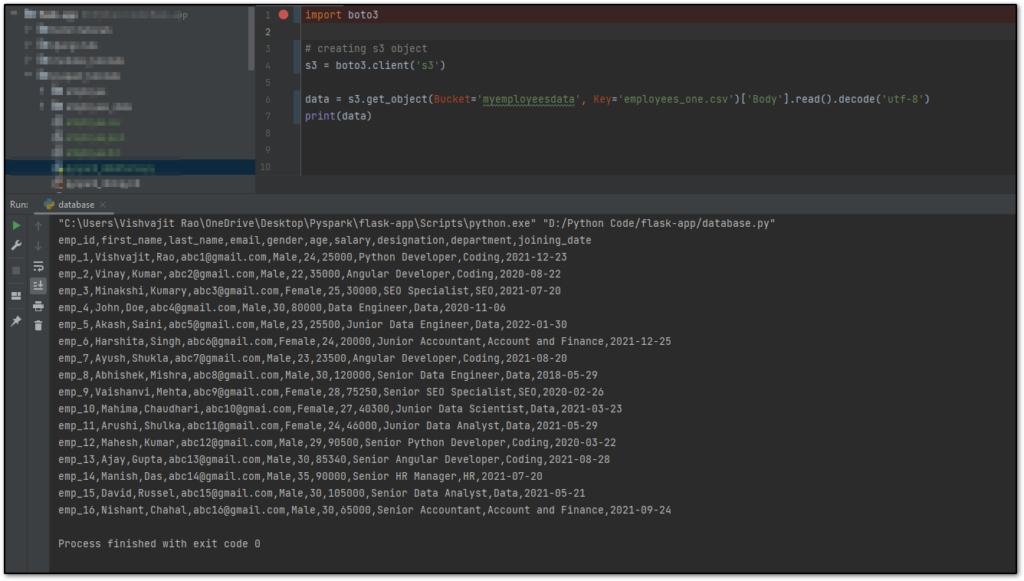

import boto3

# creating s3 client

s3 = boto3.client('s3')

# reading data from employees_one.csv file

data = s3.get_object(Bucket='myemployeesdata', Key='employees_one.csv')['Body'].read().decode('utf-8')

print(data)

Explaining the above code:

- First, I imported the boto3 library.

- Second, I created an S3 client to make AWS S3 requests.

- Third, I read the data from the employees_one.csv file inside the myemployeesdata bucket and decoded it as UTF-8 text.

- Fourth, I printed the data.
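The four steps above can be wrapped in a small helper so the bucket and key become arguments. This is only a sketch: the helper name `read_s3_text`, the `FakeS3Client` stand-in, and the sample column names are assumptions made for illustration. The fake client lets you dry-run the helper without AWS credentials; when no client is passed, the helper creates a real boto3 client.

```python
import io


def read_s3_text(bucket, key, client=None, encoding="utf-8"):
    """Fetch one S3 object and return its body decoded as text."""
    if client is None:
        # Imported lazily so the helper can be exercised without boto3.
        import boto3

        client = boto3.client("s3")
    response = client.get_object(Bucket=bucket, Key=key)
    return response["Body"].read().decode(encoding)


class FakeS3Client:
    """Hypothetical stand-in that mimics get_object() for a local dry run."""

    def __init__(self, objects):
        self._objects = objects

    def get_object(self, Bucket, Key):
        return {"Body": io.BytesIO(self._objects[(Bucket, Key)])}


# Made-up file contents; the real employees_one.csv will differ.
fake = FakeS3Client({("myemployeesdata", "employees_one.csv"): b"id,name\n1,Asha\n"})
text = read_s3_text("myemployeesdata", "employees_one.csv", client=fake)
print(text)
```

With configured credentials, calling `read_s3_text('myemployeesdata', 'employees_one.csv')` without the `client` argument should behave like the code above.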

As you can see, I have hardcoded the value of the Key parameter, but you can also look it up with the code below, which returns the key of the first object listed in the bucket.

key = s3.list_objects(Bucket='myemployeesdata').get("Contents")[0].get("Key")

Then pass key to the Key parameter of the get_object() method.
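The lookup above takes the first entry of "Contents", which may not be the file you want, and it fails when the bucket is empty because "Contents" is then missing from the response. A slightly more defensive sketch is to filter the listing for keys ending in .csv. The helper name `csv_keys` and the sample listing (including the names employees_two.csv and notes.txt) are assumptions for illustration.

```python
def csv_keys(listing):
    """Return the keys ending in .csv from a list_objects-style response."""
    # "Contents" is absent when the bucket (or prefix) has no objects.
    contents = listing.get("Contents", [])
    return [item["Key"] for item in contents if item["Key"].lower().endswith(".csv")]


# Hypothetical response, trimmed to the one field used here.
sample_listing = {
    "Contents": [
        {"Key": "employees_one.csv"},
        {"Key": "employees_two.csv"},
        {"Key": "notes.txt"},
    ]
}

print(csv_keys(sample_listing))  # ['employees_one.csv', 'employees_two.csv']
print(csv_keys({}))              # []
```

With a real client you could pass the response of `s3.list_objects_v2(Bucket='myemployeesdata')` to the helper. Keep in mind that a single listing call returns at most 1,000 keys.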

As you can see above, I have written and executed the same code in my PyCharm IDE, but there is a problem: the CSV data is returned as a single string. That makes it harder to work with, because the first line of the string is the header, the second line is one employee's record, and so on.

We cannot yet work with each record individually. To fix this, we will change the code a little and convert the data into a list of dictionaries, where each dictionary represents one employee's information.

Let’s see how.

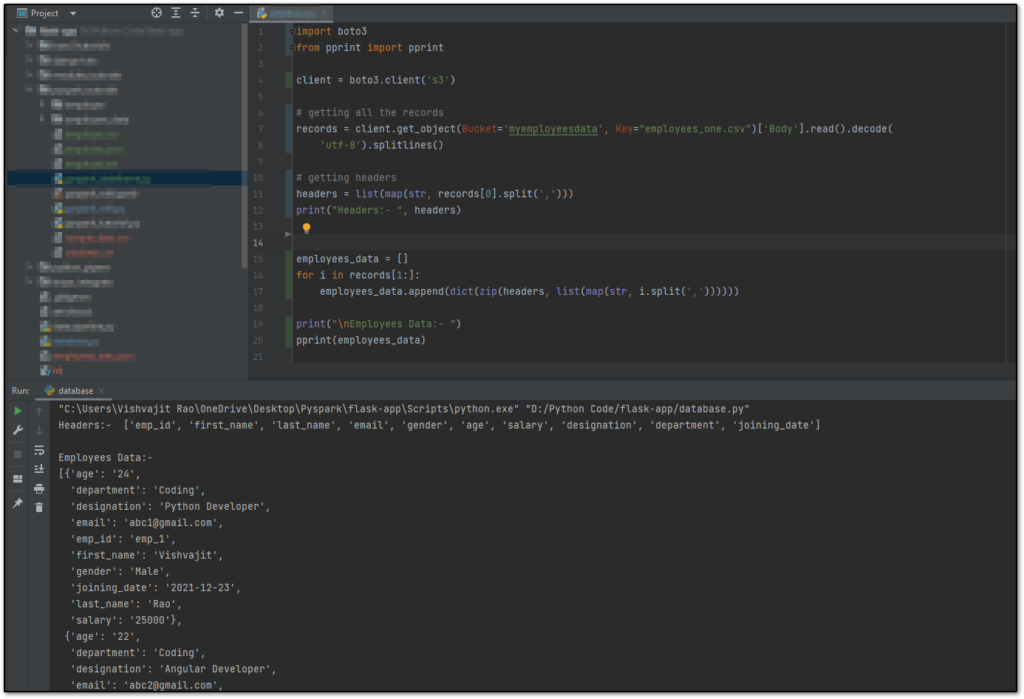

import boto3

from pprint import pprint

client = boto3.client('s3')

# getting all the records

records = client.get_object(Bucket='myemployeesdata', Key="employees_one.csv")['Body'].read().decode('utf-8').splitlines()

# getting headers

headers = list(map(str, records[0].split(',')))

print("Headers:- ", headers)

employees_data = []

for i in records[1:]:

    employees_data.append(dict(zip(headers, list(map(str, i.split(','))))))

print("\nEmployees Data:- ")

pprint(employees_data)

Explaining the above code:

- Imported the boto3 library.

- Imported pprint from the built-in pprint module, which is used for pretty-printing.

- Created an S3 client to interact with AWS S3.

- Used the S3 client's get_object() method to get all the data from the employees_one.csv file, then split it into a list of lines with splitlines().

- Got the headers from the first line, because the first line of a CSV file holds the headers.

- Declared an empty Python list to hold the dictionaries.

- Iterated over the records list starting from the second line. Several things happen for each line. Let's see what.

- Using i.split(','), I split the line at each comma. It returns a list.

- Passed i.split(',') to the map() function as the second argument, with str as the first argument, to convert each item of the list to a string. It returns a map object.

- Used the list() function to convert the map object to a list.

- Used the zip() function. It takes two arguments here, the headers list and the list of values, and pairs the corresponding items of both lists together. It returns a zip object.

- Finally, converted the zip object to a Python dictionary and appended it to the employees_data list.

- In the end, I displayed both the headers and the employees_data values.
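To make the split(), zip(), and dict() steps concrete, here is a small worked example on one header line and one data line. The column names and values are made up for illustration and are not taken from employees_one.csv.

```python
# Hypothetical header and data lines standing in for two lines of the file.
header_line = "id,name,department"
data_line = "1,Asha,Finance"

headers = header_line.split(",")   # ['id', 'name', 'department']
values = data_line.split(",")      # ['1', 'Asha', 'Finance']

# zip() pairs items by position; dict() turns the pairs into key/value entries.
pairs = list(zip(headers, values))
employee = dict(pairs)

print(pairs)     # [('id', '1'), ('name', 'Asha'), ('department', 'Finance')]
print(employee)  # {'id': '1', 'name': 'Asha', 'department': 'Finance'}
```

Note that split() already returns strings, so mapping str over its result leaves the values unchanged.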

Note: If you are unsure about the map(), zip(), dict(), or list() functions, the built-in functions section of the Python documentation describes each of them.

As you can see I have executed the same code in my IDE.

So this is how you can use the boto3 library to read CSV files from S3 using Python.
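One caveat with splitting on commas by hand: a field that itself contains a comma (written inside quotes in the file) gets split in the wrong place. As an alternative sketch, the standard library's csv.DictReader builds the same list of dictionaries and respects quoted fields. The sample text below is a made-up stand-in for the decoded body returned by get_object().

```python
import csv
import io

# Hypothetical text standing in for the decoded body of employees_one.csv.
csv_text = 'id,name,city\r\n1,"Rao, Asha",Pune\r\n2,Ben,Delhi\r\n'

# csv.DictReader uses the first row as keys and respects quoted fields.
reader = csv.DictReader(io.StringIO(csv_text, newline=""))
employees_data = list(reader)

print(reader.fieldnames)
print(employees_data)
```

Here the name "Rao, Asha" stays in one field, whereas a plain split(',') would break it into two.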

Now let’s see another way to read CSV files.


Read CSV file from S3 Using Python smart_open

smart_open is a third-party Python library for reading data from files stored in S3. Apart from S3, it can read data from multiple other sources, which is where the name comes from. With this module, we can iterate directly over each line of the CSV file.

To use this, you will have to install it by using the Python pip command.

pip install smart_open

The smart_open library provides several functions; the one we are about to use here is smart_open(). Newer releases of the library recommend open() (imported from smart_open) instead and treat smart_open() as deprecated, so check the version you have installed.

The smart_open() function takes multiple parameters, but here we will use only three: uri, mode, and encoding. The uri parameter is the URI of the S3 file, mode is the mode in which the file is opened, and encoding is the character encoding of the CSV file.

To get the URI of the S3 file, click on the file in the S3 console and copy its URI. In the screenshot below, I have shown the URI (Uniform Resource Identifier) of the employees_one.csv file.

Now let’s write some simple code to read CSV files from S3 using the Python smart_open library.
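If you already know the bucket name and object key, you can also build the URI yourself, since an S3 URI has the form s3://bucket/key. The sketch below builds one and splits it back apart with the standard library. The helper names `build_s3_uri` and `split_s3_uri` are hypothetical.

```python
from urllib.parse import urlsplit


def build_s3_uri(bucket, key):
    """Join a bucket name and object key into an s3:// URI."""
    return f"s3://{bucket}/{key}"


def split_s3_uri(uri):
    """Split an s3:// URI back into (bucket, key)."""
    parts = urlsplit(uri)
    if parts.scheme != "s3" or not parts.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parts.netloc, parts.path.lstrip("/")


uri = build_s3_uri("myemployeesdata", "employees_one.csv")
print(uri)                # s3://myemployeesdata/employees_one.csv
print(split_s3_uri(uri))  # ('myemployeesdata', 'employees_one.csv')
```

This simple split is enough for plain keys like the one used here; keys containing characters such as ? or # would need more careful handling.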

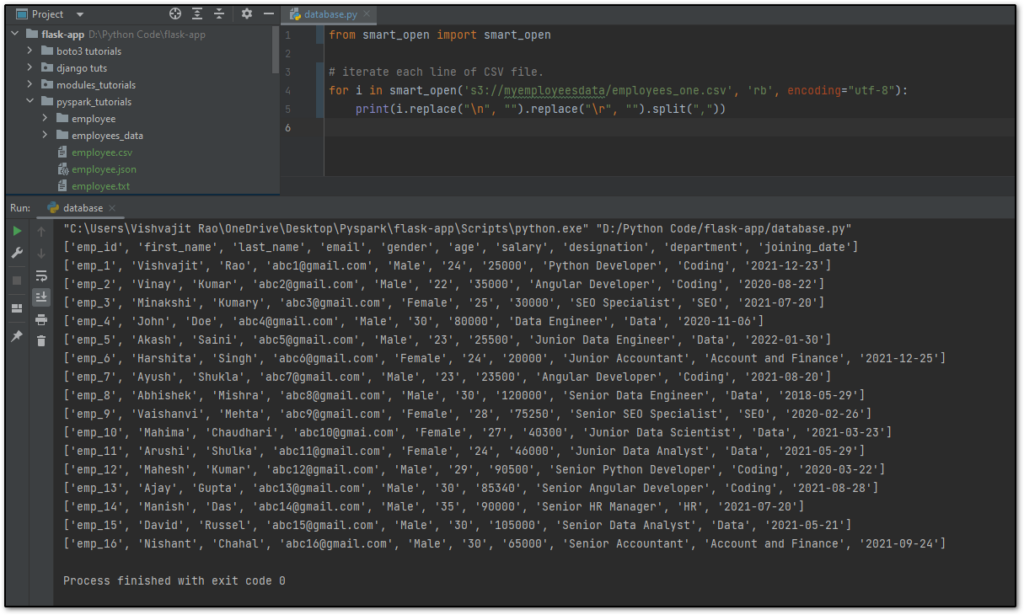

from smart_open import smart_open

# iterate each line of CSV file.

for i in smart_open('s3://myemployeesdata/employees_one.csv', 'rb', encoding="utf-8"):

    print(i.replace("\n", "").replace("\r", "").split(","))

Explaining the code:

- Imported the smart_open function from the smart_open module.

- Passed the URI, mode, and encoding to the smart_open() function and iterated over each line of the employees_one.csv file, stripping the line endings and splitting each line at the commas.

You can see the same code in my IDE as well.
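As a variation on the loop above, the line-by-line stream can be handed to the standard library's csv.reader, which strips line endings and handles quoted fields for you. This is a sketch under a few assumptions: the helper name `iter_csv_rows` is hypothetical, it uses open() from smart_open rather than smart_open(), and it assumes that function accepts the same mode, encoding, and newline arguments as Python's built-in open(). For a dry run without S3, the built-in open() and a temporary file with made-up contents stand in for the real thing.

```python
import csv
import os
import tempfile


def iter_csv_rows(uri, opener=None):
    """Yield each CSV row as a list of strings."""
    if opener is None:
        # Imported lazily; assumes a smart_open release that provides open().
        from smart_open import open as opener
    with opener(uri, "r", encoding="utf-8", newline="") as stream:
        yield from csv.reader(stream)


# Local dry run: the built-in open() stands in for smart_open's open(), and a
# temporary file with hypothetical contents stands in for the S3 object.
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "employees_one.csv")
    with open(path, "w", encoding="utf-8", newline="") as handle:
        handle.write("id,name\r\n1,Asha\r\n")
    rows = list(iter_csv_rows(path, opener=open))

print(rows)  # [['id', 'name'], ['1', 'Asha']]
```

Against S3, you would call `iter_csv_rows('s3://myemployeesdata/employees_one.csv')` and leave out the `opener` argument.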


Conclusion

So, I hope the process of reading CSV files from S3 using Python was easy to follow. As developers, data engineers, data analysts, and data scientists, we often need to extract data from a CSV file in S3 and store it in another file format or in a database table. For that, we can use either boto3 or smart_open.

In upcoming tutorials, we will see how we can store that in other file formats or in database tables.

If you found this article helpful, please share and keep visiting for further tutorials.

Thanks for taking the time to read this article….

Happy Coding…