Hi PySpark lovers, In this article, you will learn all about PySpark SQL string functions with the help of the example. PySpark provides numerous string functions in order to work with string values or string columns in PySpark DataFrame.

Throughout this article, we are about to explore commonly useful PySpark string functions with the help of examples so that next time, you can easily work with any string values in PySpark DataFrame.

Of course, To work with string functions in PySpark, we must have a PySpark DataFrame. For a demonstration of this article, I have created a sample PySpark DataFrame having some records. We will use this PySpark DataFrame throughout this guide.

Prerequisites

To work with PySpark DataFrame, You must have knowledge of Python. You can get a complete Python tutorial here.

Headings of Contents

- 1 Creating PySpark DataFrame

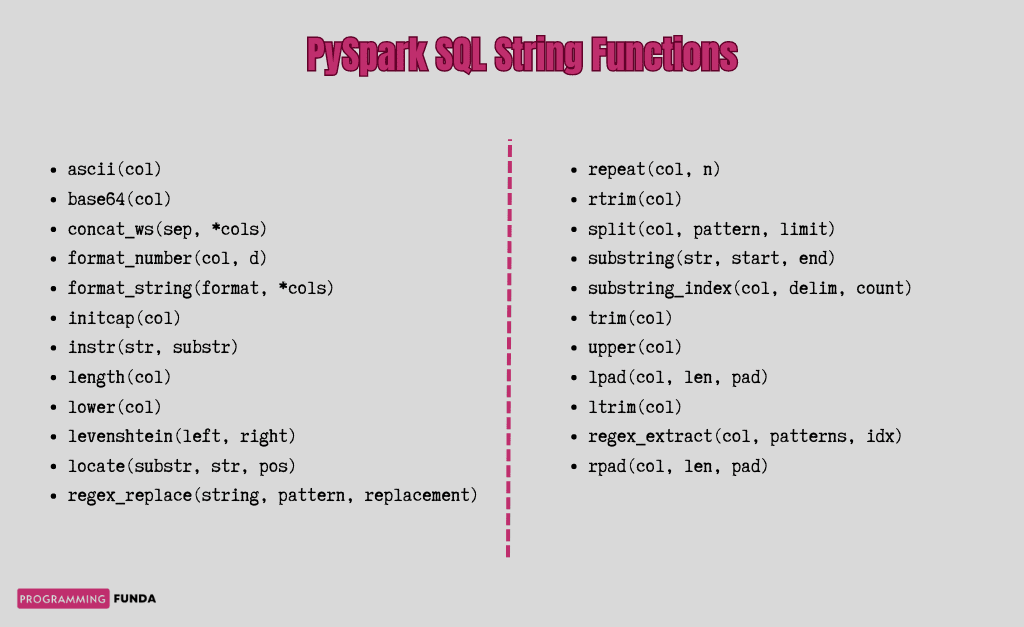

- 2 PySpark SQL String Functions

- 2.1 ascii()

- 2.2 base64(col)

- 2.3 concat_ws(sep, *col)

- 2.4 format_number(col, d)

- 2.5 format_string(format, *col)

- 2.6 initcap(col)

- 2.7 intstr(str, substr)

- 2.8 length(col)

- 2.9 lower(col)

- 2.10 levenshtein(left, right)

- 2.11 locate(substr, str, pos)

- 2.12 lpad(col, len, pad)

- 2.13 ltrim(col)

- 2.14 regex_extract(col, patterns, idx)

- 2.15 regex_replace(string, pattern, replacement)

- 2.16 rpad(col, len, pad)

- 2.17 repeat(col, n)

- 2.18 rtrim(col)

- 2.19 split(col, pattern, limit)

- 2.20 substring(str, start, end)

- 2.21 substring_index(ccol, delim, count)

- 2.22 trim(col)

- 2.23 upper(col)

- 3 Conclusion

Creating PySpark DataFrame

You can use the following code in order to create PySpark DataFrame.You can omit this part If you have already created PySpark DataFrame.

from pyspark.sql import SparkSession

# list of tuples

data = [

('E1001', 'Vishvajit', 'Rao', 40000, 'IT', '2023-01-12'),

('E1002', 'Harsh', 'Goal', 35000,'Finance', '2021-12-10'),

('E1003', 'Pankaj', 'Singh', 30000, 'IT', '2020-06-29'),

('E1004', 'Pranjal', 'Rao', 18000, 'HR', '2020-11-20'),

('E1005', 'Ritika', 'Kumari', 23000, 'IT', '2019-11-26'),

('E1006', 'Diyanshu', 'Saini', 13000, 'Account', '2023-01-01'),

('E1007', 'Pratiksha', 'Kaushik', 30000, 'HR', '2018-07-10'),

('E1008', 'Shailja', 'Srivastava', 30000, 'Account', '2020-11-22'),

('E1009', 'Vaibhav', 'Pathak', 32000, 'IT', '2022-09-10'),

('E10010', 'Harnoor', 'Singh', 50000, 'IT', '2022-05-23'),

('E10011', 'Vaishali', 'Mehta', 40000, 'Account', '2019-04-29'),

]

# column

column = ['emp_id', 'first_name', 'last_name', 'salary', 'department', 'date_of_joining']

# creating spark session

spark = SparkSession.builder.appName("www.programmingfunda.com").getOrCreate()

# creating DataFrame

df = spark.createDataFrame(data, column)

df.show()

After executing the above code, The DataFrame will be.

+------+----------+----------+------+----------+---------------+

|emp_id|first_name| last_name|salary|department|date_of_joining|

+------+----------+----------+------+----------+---------------+

| E1001| Vishvajit| Rao| 40000| IT| 2023-01-12|

| E1002| Harsh| Goal| 35000| Finance| 2021-12-10|

| E1003| Pankaj| Singh| 30000| IT| 2020-06-29|

| E1004| Pranjal| Rao| 18000| HR| 2020-11-20|

| E1005| Ritika| Kumari| 23000| IT| 2019-11-26|

| E1006| Diyanshu| Saini| 13000| Account| 2023-01-01|

| E1007| Pratiksha| Kaushik| 30000| HR| 2018-07-10|

| E1008| Shailja|Srivastava| 30000| Account| 2020-11-22|

| E1009| Vaibhav| Pathak| 32000| IT| 2022-09-10|

|E10010| Harnoor| Singh| 50000| IT| 2022-05-23|

|E10011| Vaishali| Mehta| 40000| Account| 2019-04-29|

+------+----------+----------+------+----------+---------------+Code Explanation:

Let me explain the above code so that you can get more understanding of what’s going on in this code.

- First, I imported the SparkSession class from the pyspark.sql package.

- Second, I have defined a list of tuples and each tuple represents some information about the employee like emp_id, first_name, last_name, salary, department, and date_of_joining.

- Third, I have defined a Python list that contained column names.

- Fourth, I have created a spark session using SparkSession.builder.appName(“www.programmingfunda.com”).getOrCreate(). Where

- SparkSession represents the class name defined inside the pyspark.sql package.

- builder is the attribute of the SparkSession class which has a Builder class to initiate the spark session.

- appName() is a method that is used to provide the name of the spark application, In my case the application name is www.programmingfunda.com.

- getOrCreate() is also a method that is used to get the existing spark session or create a new spark session if the spark session is not available.

- Fifth, I have used the createDataFrame() method in order to create a new PySpark DataFrame. I have passed the two arguments in createDataFrame(), First is a list of tuples, second is column names as a list. The createDataFrame() method returns a DataFrame.

- Used the DataFrame show() method that is used to show the DataFrame.

I hope you have understood the whole code with the help of the above steps. Now it’s time to explore PySpark SQL string functions with the help of the examples.

PySpark SQL String Functions

Remember, PySpark string functions will only apply to string values not others like numbers, floats, lists, etc. We will use mostly the above-created DataFrame in order to apply PySpark string functions.

Let’s start.

ascii()

PySpark string ascii() function takes the single column name as a parameter and returns the ASCII value of the first characters of the passed column value. To use ascii() function, you will have to import it from pyspark.sql.functions module.

Example:

In this, I have applied the ascii() function on top of the first_name column to get the ASCII value of the first character of the first_name column.

from pyspark.sql.functions import ascii

# ascii

df = df.select(ascii('first_name'))

# displaying

df.show()

The output will be:

+-----------------+

|ascii(first_name)|

+-----------------+

| 86|

| 72|

| 80|

| 80|

| 82|

| 68|

| 80|

| 83|

| 86|

| 72|

| 86|

+-----------------+base64(col)

This function is used to encode the base64 encoding for binary column and returns the value as a string. The base64() function takes a column name as a parameter.

Example

In this example, I have applied the base64() function for the last_name column.

# base64

df = df.select(base64('last_name'))

# displaying

df.show()

Output

+-----------------+

|base64(last_name)|

+-----------------+

| UmFv|

| R29hbA==|

| U2luZ2g=|

| UmFv|

| S3VtYXJp|

| U2Fpbmk=|

| S2F1c2hpaw==|

| U3JpdmFzdGF2YQ==|

| UGF0aGFr|

| U2luZ2g=|

| TWVodGE=|

+-----------------+concat_ws(sep, *col)

The concate_ws() function is used to concatenate the multiple input string together with the passed separator and return a single value as a string. This function takes the separator as the first argument and more than one column in order to concatenate them.

As we have seen, We have the first_name and last_name columns in the above-crated PySpark DataFrame.Now I am about to make a new column called full_name with the concatenation of first_name and last_name.

from pyspark.sql.functions import concat_ws

# using concat_ws

df = df.select(concat_ws(' ', 'first_name', 'last_name').alias('full_name'))

# displaying

df.show()

Output

+------------------+

| full_name|

+------------------+

| Vishvajit Rao|

| Harsh Goal|

| Pankaj Singh|

| Pranjal Rao|

| Ritika Kumari|

| Diyanshu Saini|

| Pratiksha Kaushik|

|Shailja Srivastava|

| Vaibhav Pathak|

| Harnoor Singh|

| Vaishali Mehta|

+------------------+format_number(col, d)

This function is used to format the numeric column x to format like ‘#,-#, -#.#-‘, rounded to d decimal places with HALF_EVEN rounded mode, and return the result as a string.

Example

I have applied the format_number() function to the salary column of PySpark DataFrame.

from pyspark.sql.functions import format_number

# using concat_ws

df = df.select(format_number('salary', 2).alias('format_number'))

# displaying

df.show()

Output

+-------------+

|format_number|

+-------------+

| 40,000.00|

| 35,000.00|

| 30,000.00|

| 18,000.00|

| 23,000.00|

| 13,000.00|

| 30,000.00|

| 30,000.00|

| 32,000.00|

| 50,000.00|

| 40,000.00|

+-------------+format_string(format, *col)

This function is used to format the arguments in printf-style and return the result as a string.

Example

from pyspark.sql.functions import format_string

# using concat_ws

df = df.select(format_string('My first and last name is %s %s', 'first_name', 'last_name').alias('format_string'))

# displaying

df.show(truncate=False)

Output

+--------------------------------------------+

|format_string |

+--------------------------------------------+

|My first and last name is Vishvajit Rao |

|My first and last name is Harsh Goal |

|My first and last name is Pankaj Singh |

|My first and last name is Pranjal Rao |

|My first and last name is Ritika Kumari |

|My first and last name is Diyanshu Saini |

|My first and last name is Pratiksha Kaushik |

|My first and last name is Shailja Srivastava|

|My first and last name is Vaibhav Pathak |

|My first and last name is Harnoor Singh |

|My first and last name is Vaishali Mehta |

+--------------------------------------------+initcap(col)

The initcap() function is used to translate the first character of the passed column name in upper case and return the result as a string. It takes the column name as a parameter.

Example

from pyspark.sql.functions import initcap

# using concat_ws

df = df.select(initcap('first_name').alias('initcap'))

# displaying

df.show(truncate=False)

Output

+---------+

|initcap |

+---------+

|Vishvajit|

|Harsh |

|Pankaj |

|Pranjal |

|Ritika |

|Diyanshu |

|Pratiksha|

|Shailja |

|Vaibhav |

|Harnoor |

|Vaishali |

+---------+intstr(str, substr)

This function is used to return the position of the first occurrence of the passed substr in a given str column. It returns null if either argument is null.

Example

In this example section, I am about to find the position of the first occurrence of a character ‘i’ in the first_name column name.

from pyspark.sql.functions import instr

# using concat_ws

df = df.select(instr('first_name', 'i').alias('instr'))

# displaying

df.show(truncate=False)

Output

+-----+

|instr|

+-----+

|2 |

|0 |

|0 |

|0 |

|2 |

|2 |

|5 |

|4 |

|3 |

|0 |

|3 |

+-----+length(col)

The length(col) function is used to return the total length of the passed column name. Spaces will also be a part of the length.

from pyspark.sql.functions import length

# using concat_ws

df = df.select(length('first_name').alias('length'))

# displaying

df.show(truncate=False)

Output

+------+

|length|

+------+

|9 |

|5 |

|6 |

|7 |

|6 |

|8 |

|9 |

|7 |

|7 |

|7 |

|8 |

+------+lower(col)

It converts the passed column string value to lowercase characters and returns the result as a string also.

from pyspark.sql.functions import lower

# using concat_ws

df = df.select(lower('first_name').alias('lower'))

# displaying

df.show(truncate=False)

Output

+---------+

|lower |

+---------+

|vishvajit|

|harsh |

|pankaj |

|pranjal |

|ritika |

|diyanshu |

|pratiksha|

|shailja |

|vaibhav |

|harnoor |

|vaishali |

+---------+levenshtein(left, right)

The levenshtein() function is used to calculate the Levenshtein distance between two given strings. The levenshtein function takes two arguments that are left and right where left indicates the first string column and right indicates the second string column.

from pyspark.sql.functions import levenshtein

# using levenshtein

df = df.select(levenshtein('first_name', 'last_name').alias('levenshtein'))

# displaying

df.show(truncate=False)

Output

+-----------+

|levenshtein|

+-----------+

|8 |

|5 |

|5 |

|6 |

|6 |

|7 |

|7 |

|7 |

|4 |

|6 |

|7 |

+-----------+locate(substr, str, pos)

The locate() function is used to return the position of the first occurrence of the substr in the given string str name after the pos value. The pos parameter is Optional here.

Example

from pyspark.sql.functions import locate

# using locate

df = df.select(locate('i', 'first_name', 2).alias('locate'))

# displaying

df.show(truncate=False)

Output

+------+

|locate|

+------+

|2 |

|0 |

|0 |

|0 |

|2 |

|2 |

|5 |

|4 |

|3 |

|0 |

|3 |

+------+lpad(col, len, pad)

It is used to left pad the string column to len with the pad. It takes string column col, len, and pad as a parameter where col indicates the name of the column, len indicates the total length of the new value and pad indicates a character to be padded left.

from pyspark.sql.functions import lpad

# using lpad

df = df.select(lpad('first_name', 10, '#').alias('lpad'))

# displaying

df.show(truncate=False)

Output

+----------+

|lpad |

+----------+

|#Vishvajit|

|#####Harsh|

|####Pankaj|

|###Pranjal|

|####Ritika|

|##Diyanshu|

|#Pratiksha|

|###Shailja|

|###Vaibhav|

|###Harnoor|

|##Vaishali|

+----------+ltrim(col)

The ltrim() function is used to remove the spaces from the left of the passed string column name col.

Output

from pyspark.sql import SparkSession

from pyspark.sql.functions import ltrim

# creating spark session

spark = SparkSession.builder.appName("www.programmingfunda.com").getOrCreate()

# creating DataFrame

df = spark.createDataFrame(

data=[(" Python",), (" Java",), (" C++",), (" C",)], schema=["programming_language"]

)

print('DataFrame before applying ltrim(): ')

df.show()

print('DataFrame after applying ltrim(): ')

# using ltrim

df = df.select(ltrim('programming_language').alias('ltrim'))

# displaying

df.show()

Output

DataFrame before applying ltrim():

+--------------------+

|programming_language|

+--------------------+

| Python|

| Java|

| C++|

| C|

+--------------------+

DataFrame after applying ltrim():

+------+

| ltrim|

+------+

|Python|

| Java|

| C++|

| C|

+------+regex_extract(col, patterns, idx)

The regex_extract() function is used to extract the specified group matched from the specified column name col. It returns a Null value if any matches will not occur.

Example

In below

from pyspark.sql.functions import regex_extract

# using regex_extract

df = df.select(regexp_extract('salary', '(\d+)', 1).alias('regex_extract'))

# displaying

df.show()

Output

+-------------+

|regex_extract|

+-------------+

| 40000|

| 35000|

| 30000|

| 18000|

| 23000|

| 13000|

| 30000|

| 30000|

| 32000|

| 50000|

| 40000|

+-------------+regex_replace(string, pattern, replacement)

The regex_replace() function is used to replace the substring with a specified value after matching the specified regex format.

It takes string, pattern, and replacement as a parameter where the string indicates the name of the PySpark DataFrame column name, the pattern indicates the regex format to match from string, and the replacement indicates some value in order to replace when any matching will occur.

Suppose In the last_name column, I am about to replace the character ‘a‘ with an underscore (_) symbol.

Example

from pyspark.sql.functions import regexp_replace

# using regex_replace

df = df.select(regexp_replace('last_name', '[a]', '_').alias('regexp_replace'))

# displaying

df.show()

Output

+--------------+

|regexp_replace|

+--------------+

| R_o|

| Go_l|

| Singh|

| R_o|

| Kum_ri|

| S_ini|

| K_ushik|

| Sriv_st_v_|

| P_th_k|

| Singh|

| Meht_|

+--------------+rpad(col, len, pad)

The rpad() function is used to right-pad the string to the width len with the pad.

Example

from pyspark.sql.functions import rpad

# using rpad

df = df.select(rpad('last_name', 10, '*').alias('rpad'))

# displaying

df.show()

Output

+----------+

| rpad|

+----------+

|Rao*******|

|Goal******|

|Singh*****|

|Rao*******|

|Kumari****|

|Saini*****|

|Kaushik***|

|Srivastava|

|Pathak****|

|Singh*****|

|Mehta*****|

+----------+repeat(col, n)

The repeat() function is used to repeat the col with n number of times and returns a new string.

from pyspark.sql.functions import repeat

# using repeat

df = df.select(repeat('last_name', 2).alias('repeat'))

# displaying

df.show()

Output

+--------------------+

| repeat|

+--------------------+

| RaoRao|

| GoalGoal|

| SinghSingh|

| RaoRao|

| KumariKumari|

| SainiSaini|

| KaushikKaushik|

|SrivastavaSrivastava|

| PathakPathak|

| SinghSingh|

| MehtaMehta|

+--------------------+rtrim(col)

The rtrim() function is used to remove the spaces from the right side of the passed column name columnName.

Example

from pyspark.sql import SparkSession

from pyspark.sql.functions import rtrim

# creating spark session

spark = SparkSession.builder.appName("www.programmingfunda.com").getOrCreate()

# creating DataFrame

df = spark.createDataFrame(

data=[("Python ",), ("Java ",), ("C++ ",), ("C ",)], schema=["programming_language"]

)

print('DataFrame before applying rtrim(): ')

df.show()

print('DataFrame after applying rtrim(): ')

# using rtrim

df = df.select(rtrim('programming_language').alias('rtrim'))

# displaying

df.show()

Output

DataFrame before applying rtrim():

+--------------------+

|programming_language|

+--------------------+

| Python |

| Java |

| C++ |

| C |

+--------------------+

DataFrame after applying rtrim():

+------+

| rtrim|

+------+

|Python|

| Java|

| C++|

| C|

+------+split(col, pattern, limit)

The split() function is used to split the string with a given pattern. It returns a list of separated strings.

Example

In this example, I have split the first_name column with the character ‘i‘.

from pyspark.sql.functions import split

# using split

df = df.select(split('first_name', 'i').alias('split'))

# displaying

df.show()

Output

+-------------+

| split|

+-------------+

|[V, shvaj, t]|

| [Harsh]|

| [Pankaj]|

| [Pranjal]|

| [R, t, ka]|

| [D, yanshu]|

| [Prat, ksha]|

| [Sha, lja]|

| [Va, bhav]|

| [Harnoor]|

| [Va, shal, ]|

+-------------+substring(str, start, end)

The substring() function is used to extract the substring or subpart of the passed column name or string str. substring will start from the start position and end with the end position.

from pyspark.sql.functions import substring

# using substring

df = df.select(substring('first_name', 2, 6).alias('substring'))

# displaying

df.show()

Output

---------+

|substring|

+---------+

| ishvaj|

| arsh|

| ankaj|

| ranjal|

| itika|

| iyansh|

| ratiks|

| hailja|

| aibhav|

| arnoor|

| aishal|

+---------+substring_index(ccol, delim, count)

The substring_index(col, count, delim) function is used to fetch the substring from the given column columnName before count the occurrence of passed delim.

Example

from pyspark.sql import SparkSession

from pyspark.sql.functions import substring_index

# creating spark session

spark = SparkSession.builder.appName("testing").getOrCreate()

df = spark.createDataFrame([('www#programmmingfunda#com',)], ['blog'])

# using substring

df = df.select(substring_index('blog', '#', 1).alias('substring'))

# displaying

df.show(truncate=False)

Output

+---------+

|substring|

+---------+

|www |

+---------+trim(col)

The trim() function is used to remove the spaces from the start and end of the passed column name.

Example

from pyspark.sql import SparkSession

from pyspark.sql.functions import trim

# creating spark session

spark = SparkSession.builder.appName("www.programmingfunda.com").getOrCreate()

# creating DataFrame

df = spark.createDataFrame(

data=[(" Python ",), (" Java ",), (" C++ ",), (" C ",)], schema=["programming_language"]

)

print('DataFrame before applying trim(): ')

df.show()

# using trim() function

df = df.select(trim('programming_language').alias('trim'))

print('DataFrame after applying trim(): ')

df.show()

Output

DataFrame before applying trim():

+--------------------+

|programming_language|

+--------------------+

| Python |

| Java |

| C++ |

| C |

+--------------------+

DataFrame after applying trim():

+------+

| trim|

+------+

|Python|

| Java|

| C++|

| C|

+------+upper(col)

The upper() function is used to convert the passed column into uppercase.it takes the column name as a parameter.

Example

from pyspark.sql.functions import upper

# using substring

df = df.select(upper('first_name').alias('upper'))

# displaying

df.show()

Output

+---------+

| upper|

+---------+

|VISHVAJIT|

| HARSH|

| PANKAJ|

| PRANJAL|

| RITIKA|

| DIYANSHU|

|PRATIKSHA|

| SHAILJA|

| VAIBHAV|

| HARNOOR|

| VAISHALI|

+---------+Useful PySpark Articles

- Convert PySpark DataFrame Column to List

- How to Write PySpark DataFrame to CSV

- How to Convert PySpark DataFrame to JSON

- How to Apply groupBy in Pyspark DataFrame

- Merge Two DataFrames in PySpark with the Same Column Names

- Merge Two DataFrame in PySpark with Different Column Names

- How to drop duplicate rows from PySpark DataFrame?

- How to Count Null and NaN Values in Each Column in PySpark DataFrame?

- How to convert PySpark Row To Dictionary

- PySpark Column Class with Examples

- PySpark Sort Function with Examples

- How to read CSV files using PySpark

- PySpark col() Function with Examples

- Fill None or Null Values in PySpark DataFrame

- How to change the Data Type of a Column in PySpark DataFrame?

- How to drop one or multiple columns from PySpark DataFrame?

👉 All PySpark SQL string functions has been written here.

Conclusion

I hope this article was very helpful to you. PySpark SQL string functions are one of the most commonly used functions, Whenever you require to work with string values you would have a requirement of PySpark string functions. You can also say, PySpark SQL string functions because all the functions have been written inside pyspark.sql.functions package.

If you found this article helpful, Please share and keep visiting for further PySpark tutorials.

Have a great day…

Happy coding……