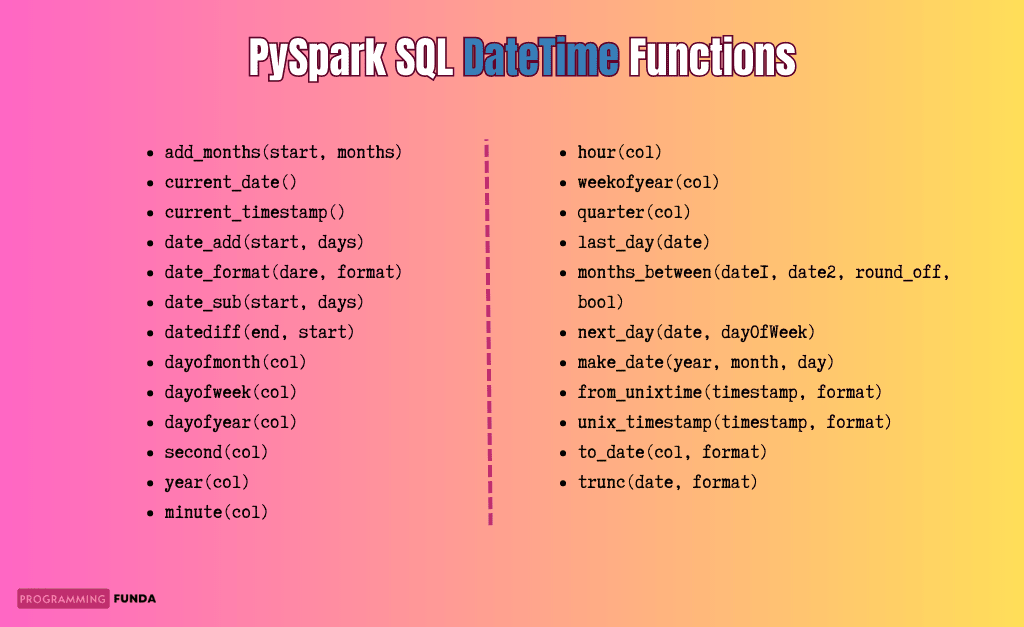

In this PySpark recipe, you will learn all about the PySpark SQL DateTime functions with the help of examples. As PySpark developers, we must have knowledge about the PySpark DateTime function because most of the time in real-life spark applications we have to deal with date-time values.PySpark provides various DateTime functions that are enough to handle any type of DateTime value.

To use all the PySpark DateTime functions we will have to import all functions from pyspark.sql.functions module because all the functions have been written in pyspark.sql.functions module.

Obviously, To work with PySpark SQL DateTime functions, we should have PySpark DataFrame so that we can apply functions on top of that DataFrame.

Headings of Contents

- 1 Creating PySpark DataFrame

- 2 PySpark SQL DateTime Functions

- 2.1 add_months(start, months)

- 2.2 current_date()

- 2.3 current_timestamp()

- 2.4 date_add(start, days)

- 2.5 date_format(dare, format)

- 2.6 date_sub(start, days)

- 2.7 datediff(end, start)

- 2.8 dayofmonth(col)

- 2.9 dayofweek(col)

- 2.10 dayofyear(col)

- 2.11 second(col)

- 2.12 year(col)

- 2.13 minute(col)

- 2.14 hour(col)

- 2.15 weekofyear(col)

- 2.16 quarter(col)

- 2.17 last_day(date)

- 2.18 months_between(date1, date2, round_off, bool)

- 2.19 next_day(date, dayOfWeek)

- 2.20 make_date(year, month, day)

- 2.21 from_unixtime(timestamp, format)

- 2.22 unix_timestamp(timestamp, format)

- 2.23 to_date(col, format)

- 2.24 trunc(date, format)

- 3 Conclusion

Creating PySpark DataFrame

For the sake of this article, I have created a sample PySpark data frame containing some records. We will use this data frame mostly throughout this article in order to apply DataTime functions.

from pyspark.sql import SparkSession

from pyspark.sql.functions import upper

# list of tuples

data = [

("1", "Vishvajit", "Rao", "2023-01-12"),

("2", "Harsh", "Goal", "2021-12-10"),

("3", "Pankaj", "Singh", "2020-06-29"),

("4", "Pranjal", "Rao", "2020-11-20"),

("5", "Ritika", "Kumari", "2019-11-26"),

("6", "Diyanshu", "Saini", "2023-01-01"),

("7", "Pratiksha", "Kaushik", "2018-07-10"),

("8", "Shailja", "Srivastava", "2020-11-22")

]

# column

column = [

"roll_number",

"first_name",

"last_name",

"date_of_admission",

]

# creating spark session

spark = SparkSession.builder.appName("www.programmingfunda.com").getOrCreate()

# creating DataFrame

df = spark.createDataFrame(

data=data, schema=column

)

# displaying

df.show()

Output

+-----------+----------+----------+-----------------+

|roll_number|first_name| last_name|date_of_admission|

+-----------+----------+----------+-----------------+

| 1| Vishvajit| Rao| 2023-01-12|

| 2| Harsh| Goal| 2021-12-10|

| 3| Pankaj| Singh| 2020-06-29|

| 4| Pranjal| Rao| 2020-11-20|

| 5| Ritika| Kumari| 2019-11-26|

| 6| Diyanshu| Saini| 2023-01-01|

| 7| Pratiksha| Kaushik| 2018-07-10|

| 8| Shailja|Srivastava| 2020-11-22|

+-----------+----------+----------+-----------------+Code Explanation

Let me explain the above code so that you can get more understanding of the PySpark code.

- First, I imported the SparkSession class from the pyspark.sql package.

- Second, I have defined a list of tuples and each tuple represents some information about the student like roll_number, first_name, last_name, and date_of_admission.

- Third, I have defined a Python list that contained column names.

- Fourth, I have created a spark session using SparkSession.builder.appName(“testing”).getOrCreate().

- SparkSession represents the class name defined inside the PySpark SQL package.

- builder is the attribute of the SparkSession class which has a Builder class to initiate the spark session.

- appName() is a method that is used to provide the name of the spark application, In my case the application name is www.programmingfunda.com.

- getOrCreate() is also a method that is used to get the existing spark session or create a new spark session if the spark session is not available.

- Fifth, I have used the createDataFrame() method in order to create a new PySpark DataFrame. I have passed the two arguments in createDataFrame(), First is a list of tuples, second is column names as a list. The createDataFrame() method returns a DataFrame.

- Sixth, I have to use the DataFrame show() method that is used to show the DataFrame-like table structure.

PySpark SQL DateTime Functions

Let’s explore all the above useful PySpark SQL DateTime functions with the help of the examples.

add_months(start, months)

This function is used to return the date after the start date. If the value of the month parameter is negative then the negative value will be deducted from the start date.

For instance, we know that, In the above created PySpark DataFrame, we have a column called date_of_admission and now I am about to add one month ahead to each date_of_admission column value using add_months() functions. The add_months() functions two parameters that is a start date and months to be extended. The value of months can be negative also.

Example

from pyspark.sql.functions import add_months

# using add_months function

df = df.select('first_name', add_months('date_of_admission', 1).alias('add_months'))

# displaying

df.show()

Output

+----------+----------+

|first_name|add_months|

+----------+----------+

| Vishvajit|2023-02-12|

| Harsh|2022-01-10|

| Pankaj|2020-07-29|

| Pranjal|2020-12-20|

| Ritika|2019-12-26|

| Diyanshu|2023-02-01|

| Pratiksha|2018-08-10|

| Shailja|2020-12-22|

+----------+----------+As you can see in the above output, How I have added one month ahead to each date of the date_of_admission column, You can compare it with the main PySpark DataFrame which we created starting of this article.

current_date()

This function is used to return the current date. To use this, we have to import it from the pyspark.sql.functions module. For example, I am about to add a new column in created PySpark DataFrame which will show the current date using the current_date() function.

Example

from pyspark.sql.functions import current_date

# using add_months function

df = df.withColumn('current_date', current_date())

# displaying

df.show()

Output

+-----------+----------+----------+-----------------+------------+

|roll_number|first_name| last_name|date_of_admission|current_date|

+-----------+----------+----------+-----------------+------------+

| 1| Vishvajit| Rao| 2023-01-12| 2023-04-30|

| 2| Harsh| Goal| 2021-12-10| 2023-04-30|

| 3| Pankaj| Singh| 2020-06-29| 2023-04-30|

| 4| Pranjal| Rao| 2020-11-20| 2023-04-30|

| 5| Ritika| Kumari| 2019-11-26| 2023-04-30|

| 6| Diyanshu| Saini| 2023-01-01| 2023-04-30|

| 7| Pratiksha| Kaushik| 2018-07-10| 2023-04-30|

| 8| Shailja|Srivastava| 2020-11-22| 2023-04-30|

+-----------+----------+----------+-----------------+------------+current_timestamp()

The current_timestamp() function is used to return the current timestamp as a result. For example, I am going to add a new column called current_timestamp in the created PySpark DataFrame.

Example:

from pyspark.sql.functions import current_timestamp

# using current_timestamp function

df = df.select('first_name', 'last_name', current_timestamp().alias('current_date'))

# displaying

df.show(truncate=False)

Output

+----------+----------+-----------------------+

|first_name|last_name |current_date |

+----------+----------+-----------------------+

|Vishvajit |Rao |2023-05-09 23:55:51.657|

|Harsh |Goal |2023-05-09 23:55:51.657|

|Pankaj |Singh |2023-05-09 23:55:51.657|

|Pranjal |Rao |2023-05-09 23:55:51.657|

|Ritika |Kumari |2023-05-09 23:55:51.657|

|Diyanshu |Saini |2023-05-09 23:55:51.657|

|Pratiksha |Kaushik |2023-05-09 23:55:51.657|

|Shailja |Srivastava|2023-05-09 23:55:51.657|

+----------+----------+-----------------------+date_add(start, days)

This function is used to add days in the start parameter which indicates the date in PySpark DataFrame.

For example, I am about to add 5 days in each date_of_admission date.

Example

from pyspark.sql.functions import date_add

# using date_add function

df = df.select(date_add('date_of_admission', 5).alias('date_add'))

# displaying DataFrame

df.show()

Output

+----------+

|date_add |

+----------+

|2023-01-17|

|2021-12-15|

|2020-07-04|

|2020-11-25|

|2019-12-01|

|2023-01-06|

|2018-07-15|

|2020-11-27|

+----------+date_format(dare, format)

The date_format() function is used to format the date/timestamp/string to the value of the string in a specified format which is provided as the second argument.

As you can see in the PySpark DataFrame, we have a date_of_admission column which stored the value in the form of yyyy-mm-dd and now I want to format all the dates of date_of_admission column in the form of yyyy/mm/dd.

Example

# using date_add function

df = df.select(date_format('date_of_admission', 'yyyy/MM/dd').alias('date_format'))

# displaying DataFrame

df.show()

Output

+-----------+

|date_format|

+-----------+

| 2023/01/12|

| 2021/12/10|

| 2020/06/29|

| 2020/11/20|

| 2019/11/26|

| 2023/01/01|

| 2018/07/10|

| 2020/11/22|

+-----------+date_sub(start, days)

The date_sub(start, days) function is used to return the date before the start date. Remember, if the value of the days parameter is negative then this amount will be added to start otherwise these will be subtracted from the start date.

Example

from pyspark.sql.functions import date_sub

# using date_sub function

df = df.select(date_sub('date_of_admission', 3).alias('date_sub'))

# displaying DataFrame

df.show()

Output

+----------+

| date_sub|

+----------+

|2023-01-09|

|2021-12-07|

|2020-06-26|

|2020-11-17|

|2019-11-23|

|2022-12-29|

|2018-07-07|

|2020-11-19|

+----------+datediff(end, start)

The datediff() function is responsible for returning the difference between two dates end and start. It returns a number of days between the start and end date.

Example

from pyspark.sql.functions import datediff, current_date

# using datediff function

df = df.select(datediff(current_date(), 'date_of_admission').alias('datediff'))

# displaying DataFrame

df.show()

Output

+--------+

|datediff|

+--------+

| 114|

| 512|

| 1041|

| 897|

| 1257|

| 125|

| 1761|

| 895|

+--------+dayofmonth(col)

The dayofmonth(col) function is used to return the day of the month. It takes the date as a parameter.

For example, I am about to return the day of the date_of_admission column.

Example

from pyspark.sql.functions import dayofmonth

# using dayofmonth function

df = df.select(dayofmonth('date_of_admission').alias('dayofmonth'))

# displaying DataFrame

df.show()

Output

+----------+

|dayofmonth|

+----------+

| 12|

| 10|

| 29|

| 20|

| 26|

| 1|

| 10|

| 22|

+----------+dayofweek(col)

The function dayofweek() is used to return the days week of the passed date column.

from pyspark.sql.functions import dayofweek

# using dayofweek function

df = df.select(dayofweek('date_of_admission').alias('dayofweek'))

# displaying DataFrame

df.show()

Output

+---------+

|dayofweek|

+---------+

| 5|

| 6|

| 2|

| 6|

| 3|

| 1|

| 3|

| 1|

+---------+dayofyear(col)

The function dayofyear(col) is used to extract the day of the year of the passed column name.

from pyspark.sql.functions import dayofyear

# using dayofyear function

df = df.select(dayofyear('date_of_admission').alias('dayofyear'))

# displaying DataFrame

df.show()

Output

+---------+

|dayofyear|

+---------+

| 12|

| 344|

| 181|

| 325|

| 330|

| 1|

| 191|

| 327|

+---------+second(col)

It extracts the second from the passed column name. The column name could be date/timestamp.

from pyspark.sql.functions import second

# using second function

df = df.select(second(current_timestamp()).alias('second'))

# displaying DataFrame

df.show()

Output

+------+

|second|

+------+

| 18|

| 18|

| 18|

| 18|

| 18|

| 18|

| 18|

| 18|

+------+year(col)

It extracts the year part from the passed column name. The column name could be date/timestamp.

from pyspark.sql.functions import current_timestamp, year

# using second function

df = df.select(year(current_timestamp()).alias('year'))

# displaying DataFrame

df.show()

Output

+------+

| year |

+------+

| 2023|

| 2023|

| 2023|

| 2023|

| 2023|

| 2023|

| 2023|

| 2023|

+------+minute(col)

It extracts the minute part from the passed column name. The column name could be date/timestamp.

from pyspark.sql.functions import current_timestamp, minute

# using minute function

df = df.select(minute(current_timestamp()).alias('minute'))

# displaying DataFrame

df.show()

Output

+------+

|minute|

+------+

| 26|

| 26|

| 26|

| 26|

| 26|

| 26|

| 26|

| 26|

+------+hour(col)

It extracts the hour part from the passed column name. The column name could be date/timestamp.

Example

from pyspark.sql.functions import current_timestamp, hour

# using hour function

df = df.select(hour(current_timestamp()).alias('hour'))

# displaying DataFrame

df.show()

Output

+----+

|hour|

+----+

| 16|

| 16|

| 16|

| 16|

| 16|

| 16|

| 16|

| 16|

+----+weekofyear(col)

The weekofyear(col) is responsible for extracting the week as an integer from the passed date. A week will always start from Monday.

from pyspark.sql.functions import weekofyear

# using weekofyear function

df = df.select(weekofyear('date_of_admission').alias('weekofyear'))

# displaying DataFrame

df.show()

Output

+----------+

|weekofyear|

+----------+

| 2|

| 49|

| 27|

| 47|

| 48|

| 52|

| 28|

| 47|

+----------+quarter(col)

The quarter(col) function is used to extract the quarter from the passed column name. The passed column name would be date/timestamp.

Example

from pyspark.sql.functions import quarter

# using quarter function

df = df.select(quarter('date_of_admission').alias('quarter'))

# displaying DataFrame

df.show()

Output

+-------+

|quarter|

+-------+

| 1|

| 4|

| 2|

| 4|

| 4|

| 1|

| 3|

| 4|

+-------+last_day(date)

The last_day(date) function is used to return the last day as the date from the passed date parameter.

from pyspark.sql.functions import last_day

# using last_day function

df = df.select(last_day('date_of_admission').alias('last_day'))

# displaying DataFrame

df.show()

Output

+----------+

| last_day|

+----------+

|2023-01-31|

|2021-12-31|

|2020-06-30|

|2020-11-30|

|2019-11-30|

|2023-01-31|

|2018-07-31|

|2020-11-30|

+----------+months_between(date1, date2, round_off, bool)

The function months_between() is used to return the number of months between two given dates daye1 and date2.

Output

from pyspark.sql.functions import months_between, current_date

# using months_between function

df = df.select(months_between(current_date(), 'date_of_admission').alias('months_between'))

# displaying DataFrame

df.show()

Output

+--------------+

|months_between|

+--------------+

| 3.83870968|

| 16.90322581|

| 34.29032258|

| 29.58064516|

| 41.38709677|

| 4.19354839|

| 57.90322581|

| 29.51612903|

+--------------+next_day(date, dayOfWeek)

It returns the first date which is later than the value of the date column based on the second argument which is dayOfWeek.The value of dayOfWeek will be any one of these “Mon”, “Tue”, “Wed”, “Thu”, “Fri”, “Sat”, “Sun”.

Example

from pyspark.sql.functions import next_day

# using next_day function

df = df.select(next_day('date_of_admission', 'Sat').alias('next_day'))

# displaying DataFrame

df.show()

Output

+----------+

| next_day|

+----------+

|2023-01-14|

|2021-12-11|

|2020-07-04|

|2020-11-21|

|2019-11-30|

|2023-01-07|

|2018-07-14|

|2020-11-28|

+----------+make_date(year, month, day)

The function make_date(year, month, day) is used to make a date object from the passed column year, month, and day.

Example

from pyspark.sql.functions import make_date

# using next_day function

df = df.select(next_day('date_of_admission', 'Sat').alias('next_day'))

# displaying DataFrame

df.show()

Output

+----------+

| next_day|

+----------+

|2023-01-14|

|2021-12-11|

|2020-07-04|

|2020-11-21|

|2019-11-30|

|2023-01-07|

|2018-07-14|

|2020-11-28|

+----------+from_unixtime(timestamp, format)

The function from_unixtime() is used to convert timestamp ( Number of seconds ) to string representing format. The default format of the format parameter is yyyy-MM-dd HH:mm:ss.

Example

from pyspark.sql.functions import from_unixtime, lit

# Set local timezone for America Los Angles

spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")

# adding default unixtime value to unixtime column

df = df.withColumn("unixtime", lit(1683455705))

# convert unixtime value to string representation value

df = df.select('unixtime', from_unixtime('unixtime').alias('unixtime'))

# displaying DataFrame

df.show()

Output

+----------+-------------------+

| unixtime| unixtime|

+----------+-------------------+

|1683455705|2023-05-07 03:35:05|

|1683455705|2023-05-07 03:35:05|

|1683455705|2023-05-07 03:35:05|

|1683455705|2023-05-07 03:35:05|

|1683455705|2023-05-07 03:35:05|

|1683455705|2023-05-07 03:35:05|

|1683455705|2023-05-07 03:35:05|

|1683455705|2023-05-07 03:35:05|

+----------+-------------------+unix_timestamp(timestamp, format)

The function unix_timestamp() is used to convert string date time with given patterns (‘yyyy-MM-dd HH:mm:ss’, by default) into Unix timestamp ( In seconds ) using the default time zone and default code.

Example

from pyspark.sql.functions import unix_timestamp, current_timestamp

# Set local timezone for America Los Angles

spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")

# adding a new column date with current timestamp

df = df.withColumn("date", current_timestamp())

# convert current timestamp to unix timestamp ( in seconds )

df = df.select('date', unix_timestamp('date').alias('unix_timestamp'))

# displaying DataFrame

df.show(truncate=False)

Output

+-----------------------+--------------+

|date |unix_timestamp|

+-----------------------+--------------+

|2023-05-07 04:10:54.466|1683457854 |

|2023-05-07 04:10:54.466|1683457854 |

|2023-05-07 04:10:54.466|1683457854 |

|2023-05-07 04:10:54.466|1683457854 |

|2023-05-07 04:10:54.466|1683457854 |

|2023-05-07 04:10:54.466|1683457854 |

|2023-05-07 04:10:54.466|1683457854 |

|2023-05-07 04:10:54.466|1683457854 |

+-----------------------+--------------+to_date(col, format)

The function to_date() is used to convert passed col into the specified format. This function takes col and format as parameters.

Example:

from pyspark.sql.functions import to_date, current_timestamp

# adding a new column date with current timestamp

df = df.withColumn("date", current_timestamp())

# using to_date() function

df = df.select('date', to_date('date').alias('to_date'))

# displaying DataFrame

df.show(truncate=False)

Output

+-----------------------+----------+

|date |to_date |

+-----------------------+----------+

|2023-05-07 16:50:11.641|2023-05-07|

|2023-05-07 16:50:11.641|2023-05-07|

|2023-05-07 16:50:11.641|2023-05-07|

|2023-05-07 16:50:11.641|2023-05-07|

|2023-05-07 16:50:11.641|2023-05-07|

|2023-05-07 16:50:11.641|2023-05-07|

|2023-05-07 16:50:11.641|2023-05-07|

|2023-05-07 16:50:11.641|2023-05-07|

+-----------------------+----------+trunc(date, format)

The function truc() is used to truncate the part of the date by specified format. The value of format could be ‘year’, ‘yyyy’, ‘yy’ to truncate by year, or ‘month’, ‘mon’, ‘mm’ to truncate by month Other options are: ‘week’, ‘quarter’.It returns the truncated date.

Example

from pyspark.sql.functions import trunc

# truncate month from date_of_admission column

df = df.select('date_of_admission', trunc('date_of_admission', 'mm').alias('trunc'))

# displaying DataFrame

df.show(truncate=False)

Output

+-----------------+----------+

|date_of_admission|trunc |

+-----------------+----------+

|2023-01-12 |2023-01-01|

|2021-12-10 |2021-12-01|

|2020-06-29 |2020-06-01|

|2020-11-20 |2020-11-01|

|2019-11-26 |2019-11-01|

|2023-01-01 |2023-01-01|

|2018-07-10 |2018-07-01|

|2020-11-22 |2020-11-01|

+-----------------+----------+PySpark Related Articles

- Convert PySpark DataFrame Column to List

- How to Write PySpark DataFrame to CSV

- How to Convert PySpark DataFrame to JSON

- How to Apply groupBy in Pyspark DataFrame

- Merge Two DataFrames in PySpark with the Same Column Names

- Merge Two DataFrame in PySpark with Different Column Names

- How to drop duplicate rows from PySpark DataFrame?

- How to Count Null and NaN Values in Each Column in PySpark DataFrame?

- How to convert PySpark Row To Dictionary

- PySpark Column Class with Examples

- PySpark Sort Function with Examples

- How to read CSV files using PySpark

- PySpark col() Function with Examples

- Fill None or Null Values in PySpark DataFrame

- How to change the Data Type of a Column in PySpark DataFrame?

- How to drop one or multiple columns from PySpark DataFrame?

- PySpark SQL String Functions with Examples

👉 PySpark DateTime Functions Official Documentation:- Click Here

Conclusion

So, In this article, We have seen Python SQL DateTime functions with the help of examples. I hope the tutorial was pretty simple and straightforward. If you are working on any real-life PySpark application then most of the time you may have to work with DateTime-related tasks.

PySpark Provides numerous functions to work with DateTime, You can format the DateTime, truncate the part of DateTime, Add and Subtract Year, Month, and Day in the Date, etc. Remember, all the Python functions have been written inside pyspark.sql.functions module that’s why you have to import all the functions from that module.

If you found this article helpful, Please share and keep visiting for further PySpark tutorials.

Thanks for taking the time to read this article…

Have a nice day…..